Trustworthy AI+CPS

Safety Guarantees of Learning-Enabled CPS

Safety is critical in Cyber-Physical Systems (CPS) applications such as autonomous driving, power grids, and medical robots, yet guaranteeing it raises multiple challenges.

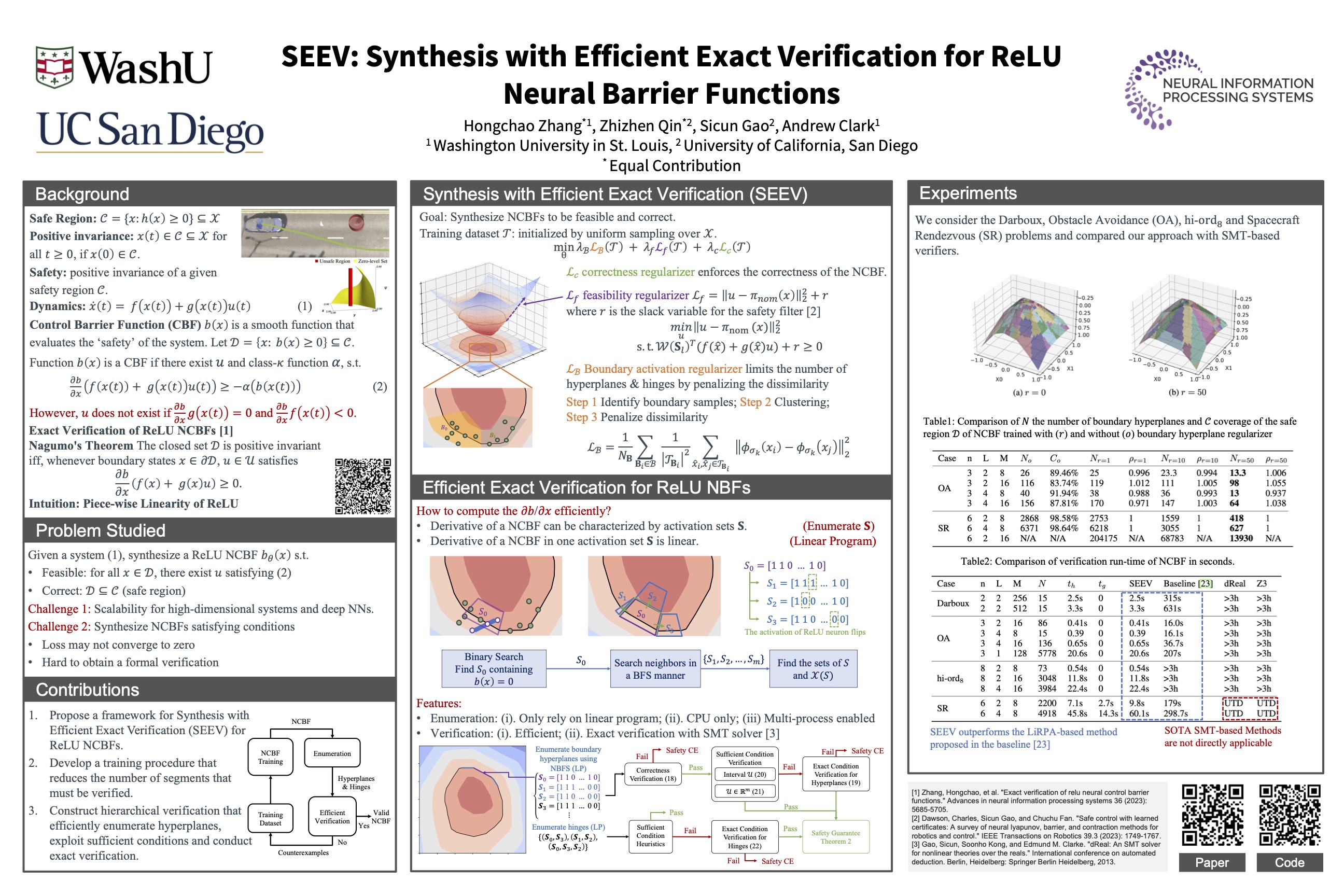

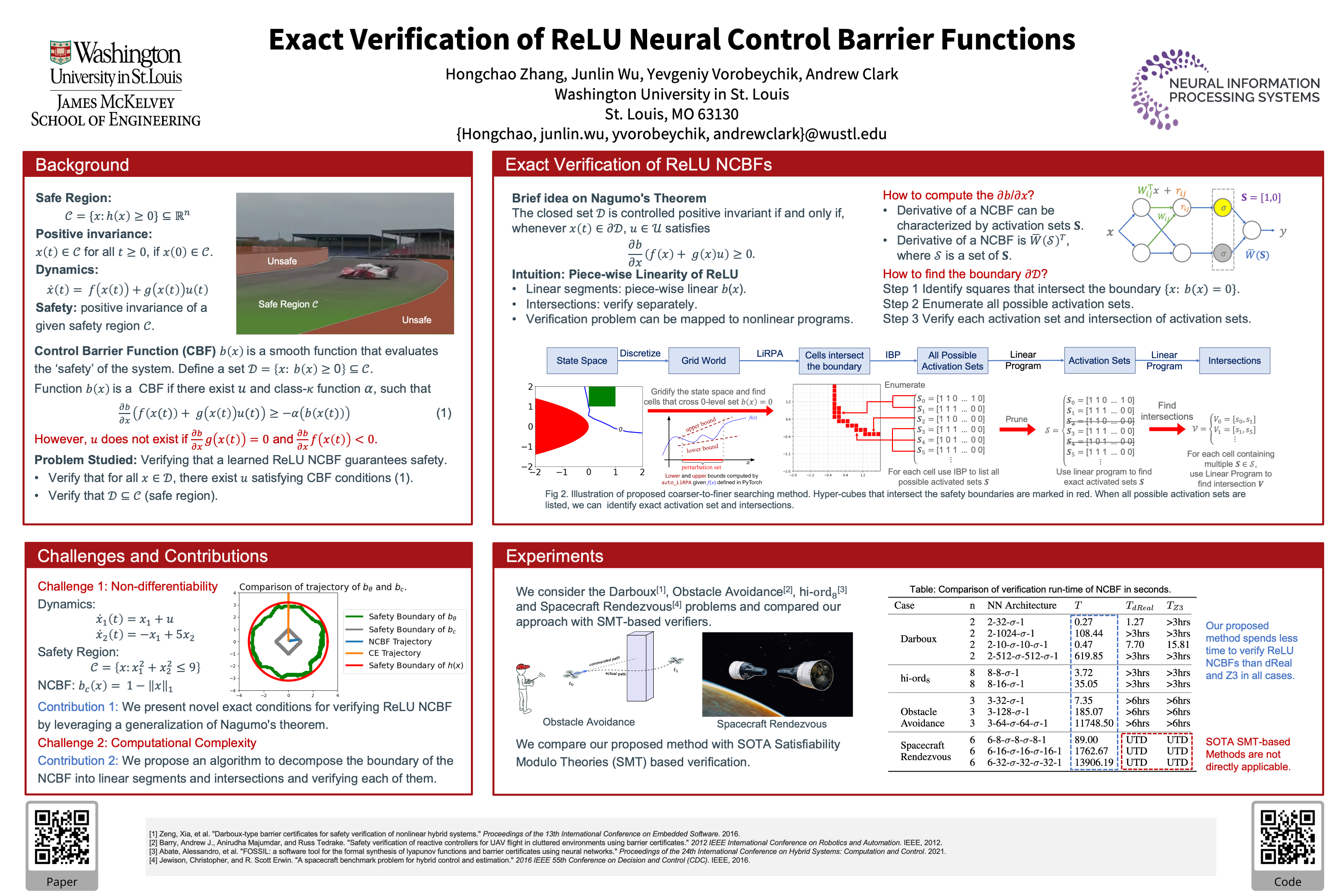

CPS are increasingly integrated with learning-enabled components such as Neural Networks (NNs) to enhance performance and adaptability. Such integration increases the complexity of safety verification due to the lack of transparency and interpretability of the NN components.

The goal of my research is to design trustworthy learning-enabled CPS and ensure their continued safe operation. The work has two major aspects: (i) safety verification for learning-enabled systems and (ii) safe control policy training with formal guarantees.

Safety verification of learning-enabled CPS is challenging because of the high dimensionality and nonlinear dynamics of the underlying physical system, as well as the complexity of the ML models. Existing approaches mitigate these challenges either by exhaustive search over the state space, which may not scale to high-dimensional systems, or by constructing over-approximations of the NNs' output space, which can make verification overly conservative.
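To make this trade-off concrete, here is a minimal Python sketch on a toy, hypothetical two-input ReLU network. The weights, the input box [-1, 1]^2, and the safety limit 2.8 are all illustrative assumptions. `interval_bounds` applies interval bound propagation, one simple over-approximation technique. `partitioned_upper_bound` splits the input space into k^d cells and bounds each one, serving as a crude stand-in for exhaustive search. Neither function is meant to represent the specific methods discussed here.

```python
from itertools import product

# Hypothetical 2-2-1 ReLU network, weights chosen only for illustration:
#   y = relu(x1 + x2) + relu(x1 - x2); its true maximum on [-1, 1]^2 is 2.
W1 = [[1.0, 1.0], [1.0, -1.0]]
b1 = [0.0, 0.0]
W2 = [[1.0, 1.0]]
b2 = [0.0]


def relu(v):
    return [max(0.0, a) for a in v]


def affine(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(x):
    """Exact network output at a single input point."""
    return affine(W2, b2, relu(affine(W1, b1, x)))[0]


def affine_bounds(W, b, lo, hi):
    """Interval arithmetic for y = W x + b given lo <= x <= hi elementwise."""
    out_lo, out_hi = [], []
    for row, bi in zip(W, b):
        # A positive weight is smallest at lo and largest at hi; a negative weight the reverse.
        out_lo.append(bi + sum(w * (l if w >= 0 else h) for w, l, h in zip(row, lo, hi)))
        out_hi.append(bi + sum(w * (h if w >= 0 else l) for w, l, h in zip(row, lo, hi)))
    return out_lo, out_hi


def interval_bounds(lo, hi):
    """Interval bound propagation: a sound but possibly loose enclosure of the output."""
    lo, hi = affine_bounds(W1, b1, lo, hi)
    lo, hi = relu(lo), relu(hi)  # ReLU is monotone, so it maps bounds to bounds
    lo, hi = affine_bounds(W2, b2, lo, hi)
    return lo[0], hi[0]


def partitioned_upper_bound(lo, hi, k):
    """Split every input dimension into k cells and bound each cell separately.

    Still sound, and tighter as k grows, but it needs k ** d cells for d inputs.
    """
    edges = [[l + (h - l) * i / k for i in range(k + 1)] for l, h in zip(lo, hi)]
    best = float("-inf")
    for idx in product(range(k), repeat=len(lo)):
        cell_lo = [edges[d][i] for d, i in enumerate(idx)]
        cell_hi = [edges[d][i + 1] for d, i in enumerate(idx)]
        best = max(best, interval_bounds(cell_lo, cell_hi)[1])
    return best


if __name__ == "__main__":
    lo, hi = [-1.0, -1.0], [1.0, 1.0]
    limit = 2.8  # hypothetical safety requirement: y <= limit on the whole box
    for k in (1, 2, 4):
        ub = partitioned_upper_bound(lo, hi, k)
        print(f"k={k}: {k ** len(lo)} cells, upper bound {ub}, verified: {ub <= limit}")
```

On the whole box (k = 1), the interval bound is 4.0 even though the true maximum is 2. The limit therefore cannot be verified, even though it holds. Refining to 4 cells per dimension proves it using 16 cells. In a 10-dimensional state space, however, the same resolution would already require 4**10 = 1,048,576 cells.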